Case Studies

Procedural Home Decorations

One of the features of the Houzz mobile application is "View in my room". This feature lets users view a real-time 3D model of the product they intend to purchase on their iPhone or Android device. There are literally millions of actual products in Houzz's database, and converting all of them to 3D by hand would be a lengthy and costly process.

After joining Houzz I realized that many of those 3D products, such as pillows, carpets, wall decorations and mirrors, could be generated procedurally.

I designed an automated pipeline which generated more than 1.75 million procedural assets in less than two months. (This could have been reduced to three weeks; the reason is explained below.) Assuming 20 USD per model if outsourced, this pipeline saved more than 35,000,000 USD, not even considering the time factor.

This system was designed to minimize human interaction as much as possible. The whole pipeline is orchestrated with Python. The first part, gathering the necessary data for each product, includes:

- Connecting to Houzz's database API and finding the correct categories
- Finding the products in the above category which don't have a 3D model
- Acquiring and parsing the JSON data for every product
- Refining the data and removing discrepancies and anomalies
- Exporting a CSV file

There are many discrepancies in measurements and length units, and sometimes the data simply don't exist! That is because the information is usually entered into Houzz's database directly by the manufacturers of the products. Therefore I had to come up with a few algorithms to sift through the data or extract it from the images of the products. Without this issue, production could have been much faster.
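A minimal sketch of what this kind of clean-up can look like, assuming dimensions arrive as free-text strings with mixed units. The field names (`id`, `width`, `height`), the unit table and the default unit are hypothetical and are not Houzz's actual schema or API.

```python
import csv
import io
import re

# Conversion factors to centimeters (illustrative set of units).
UNIT_TO_CM = {"cm": 1.0, "mm": 0.1, "m": 100.0, "in": 2.54, '"': 2.54, "ft": 30.48}

_DIM_RE = re.compile(r'^\s*([0-9]*\.?[0-9]+)\s*(cm|mm|m|in|ft|")?\s*$', re.IGNORECASE)


def to_cm(raw, default_unit="in"):
    """Parse a string such as '18 in' or '45.7cm' into centimeters, or None."""
    if raw is None:
        return None
    match = _DIM_RE.match(str(raw))
    if not match:
        return None
    value, unit = match.groups()
    factor = UNIT_TO_CM[(unit or default_unit).lower()]
    return round(float(value) * factor, 2)


def clean_products(products):
    """Keep only products whose width and height could both be parsed."""
    rows = []
    for product in products:
        width = to_cm(product.get("width"))
        height = to_cm(product.get("height"))
        if width is None or height is None:
            continue  # would need a fallback, e.g. measuring the product image
        rows.append({"id": product["id"], "width_cm": width, "height_cm": height})
    return rows


def to_csv(rows):
    """Serialize the cleaned rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["id", "width_cm", "height_cm"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Products that fail to parse are skipped here; in a pipeline like the one described they would be routed to an image-based fallback instead.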

Basically the only information needed for making each model is its image, width and height. The depth is estimated from the overall shape and the two known dimensions. This information is sent to Houdini, which generates 3D objects based on the contour of the images. Sometimes the images are slanted and are straightened in Houdini before the object is created. The result is an optimized, game-engine-ready 3D model with clean topology and UVs. A cleaned-up texture is also generated as a by-product.

These models and textures are then imported into Maya with Python. A Meta Node which holds the necessary information for the Houzz engine is added. A material is also assigned and exported as a JSON file. The scene is then saved as a Maya ASCII file (.ma). At the end, all the generated files are ready to be checked into Perforce and sent to the engine.

The image below shows an abstract model of the pipeline.

An abstract representation of Houzz Procedural Asset Pipeline

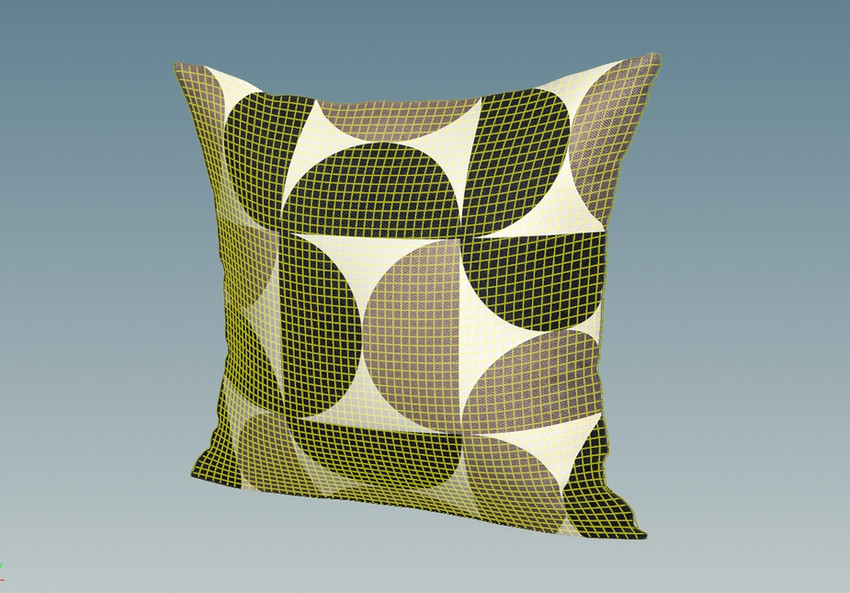

Below are a few samples of the 3D assets generated by this pipeline. As you can see, the contour of each asset is unique and follows the actual product's contour. The third image in each group is a photo of the actual product on the Houzz website.

Procedural 3D assets generated with Houdini based on actual products

Vanity Template Generator

Vanities are one of the most important categories in Houzz's marketplace and therefore needed more attention. Because of their complexity and uniqueness, vanities are created manually. Every 3D asset starts with basic shapes which form the scaffold of the model. Creating a suitable base takes the artists some time; besides the general resemblance, the measurements have to be matched precisely.

I designed a procedural vanity generator in Houdini and shipped it to Maya with Houdini Engine. This tool allows the artists to design a base 3D model by entering the measurements and tweaking parameters. Below are some samples, each of which was created in less than five minutes.

Procedural vanities: a Houdini digital asset shipped to Maya as a plugin

This tool is pretty flexible and can replicate most of the vanities on the market, even curved ones. One of the main challenges was finding a way to generate the drawer patterns. The tool makes it very easy for the artists to create a base grid and then connect the selected pieces with a simple click of the mouse. The drawers are also cut out from the body in real time while the artist is using the tool. Each template can be saved as a preset for later use. This tool has the potential to be expanded to other products such as tables and desks, chairs, closets and shelves.

Art Pipeline Tools

One of the responsibilities of a technical artist is creating tools that facilitate the artists' work and save them time. Maya is the main 3D software of the art pipeline at Houzz; therefore I wrote most of the in-house tools for Maya, created toolbars and designed icons for them.

Proprietary Maya tools for Houzz art pipeline

Many of these tools save artists time by cutting down the manual steps they have to take to get to the desired result. For instance, in the first row of the toolbars in the image above, Houzz tools, there are buttons to check the scene, including the textures, materials and generated metadata, into Perforce with a single click. Other tools do necessary background work that would not be possible without coding. For instance, the Meta-Data node which carries all the information of the scene cannot be created manually.

The second row, Art Tools, contains, besides the usual utilities, an asset browser (Widget Browser) for browsing commonly used models which appear in a lot of furniture, like knobs, faucets and handles (hence the name). This tool, which is built with PySide (basically a free version of PyQt, now embedded in Maya), also enables the artists to add the models they have created to the library with a simple click; an icon is then created on the spot and everything gets checked into Perforce.

The interface of the Widget Browser tool

Implemented in Python & PySide

There are also material editing tools dedicated to creating physically based (PBR) materials and accessing the material files stored as JSON in the internal material library. The artists are also able to add the materials they use the most to their own personal toolbar.

The interface of the Material Browser tool

Implemented in Python & PySide

After a tool has been written and used by artists for a while, they might find specific, initially unforeseen cases that break it. At other times they need more features added to a certain tool. Updating and maintaining these tools is an ongoing task for a technical artist, with a lot of interesting challenges which lead to a better understanding of the system and the needs of the artists.

Procedural tools for PGA Tour

The need for procedural content creation for games is greater than ever. This is the result of growing demand for generating more assets in less time, with more control and the ability to iterate on their production. More artists and technical artists are recognizing the real value of procedural content creation and are therefore trying to learn, expand and embed that workflow in their pipelines. EA is no exception: many procedural tools have been created there over the last few years.

With the fast transition of the golf game (PGA Tour) to Frostbite, procedural methods became a necessity for generating or regenerating many of the assets, one of which is the Terrain. Generating terrain has always been one of the main concerns for PGA Tour as it is the core of the game; so is the Vegetation. Some fantasy courses also required procedural Rocks. The Houdini tools developed for the PGA Tour game to address these needs are explained here.

Many assets in PGA Tour game were created procedurally

Terrain Heightmap Generator

Fig. 1: Frostbite Terrain created from the Heightmap generated by Houdini

Previously, on Gen3 games, the terrain was manually created as polygonal meshes. Frostbite’s terrain, on the other hand, is created from a height map, a 16-bit-per-channel grayscale image (with black for the lowest and white for the highest altitude) containing the elevation data of the land (Fig. 2). This image can be created manually or from existing data. At the moment we have two different sets of data for generating those height maps: Gen3 Meshes and Scanned Point Clouds.

The basis of the workflow is defining the bounding box of the meshes, expressing the height of each point on a scale of 0 to 1 relative to the minimum and maximum of the bounding box, and translating that number into a gray value from black to white (Fig. 3).
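The remapping described above can be sketched in a few lines. This is an illustration only, not the Houdini network itself; the function name is hypothetical, and the flat-terrain fallback is an assumption.

```python
def heights_to_16bit(heights):
    """Map raw heights to 16-bit gray values: lowest -> 0, highest -> 65535."""
    lo, hi = min(heights), max(heights)
    span = hi - lo
    if span == 0:
        return [0 for _ in heights]  # flat terrain: every sample is "black"
    # Normalize each height to [0, 1] inside the bounding range, then scale
    # to the 16-bit integer range used by the height map image.
    return [round((h - lo) / span * 65535) for h in heights]
```

For example, `heights_to_16bit([0.0, 5.0, 20.0])` maps the lowest sample to 0 and the highest to 65535, with the middle sample a quarter of the way up the gray range.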

Fig. 2: Heightmap generated by Houdini

Fig. 3: The minimum and maximum heights are mapped to a gray value between black and white

Gen3 Meshes

Gen3 meshes need some preparation before they can be used for the Heightmap. Previously each course consisted of different holes, each of which had a separate mesh. The first step is gathering all those separate holes into one single terrain mesh and placing it at a suitable position at the origin of the 3D world (Fig. 4). Then that mesh is exported as an Alembic file. There are also a lot of curve networks which are necessary for creating masks and other calculations; they need to be sent to Houdini as well and are explained below.

The water meshes should be separate objects in the Maya scene as they need some extra manipulation. Gen3 water meshes were flat surfaces and the depth was faked in the shader. In Frostbite the bottom of the water has to exist as actual data. The main parameter used in calculating the depth for the water meshes is the distance from the shore, which is then remapped through a curve (Fig. 5).
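As a rough illustration of remapping shore distance through a curve, the sketch below evaluates a piecewise-linear profile. The profile keys, the `falloff_distance` parameter and the function names are hypothetical; the real tool presumably uses an artist-editable ramp in Houdini.

```python
def ramp(x, keys):
    """Evaluate a piecewise-linear curve given as sorted (position, value) keys."""
    if x <= keys[0][0]:
        return keys[0][1]
    for (x0, y0), (x1, y1) in zip(keys, keys[1:]):
        if x <= x1:
            t = (x - x0) / (x1 - x0)
            return y0 + t * (y1 - y0)
    return keys[-1][1]


# Hypothetical profile: normalized shore distance -> fraction of maximum depth.
DEPTH_PROFILE = [(0.0, 0.0), (0.2, 0.6), (1.0, 1.0)]


def natural_depth(shore_distance, falloff_distance, max_depth):
    """Depth of the pond floor at a given distance from the shore."""
    normalized = min(shore_distance / falloff_distance, 1.0)
    return max_depth * ramp(normalized, DEPTH_PROFILE)
```

With this profile the floor drops quickly near the shore and then flattens out toward `max_depth`.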

Fig. 4: Gen3 Mesh

Another factor is the retaining walls. Wherever there are walls around the water, the depth drops abruptly. Calculating this sudden change takes more steps. First the distance from the wall curves is calculated. There are cases where two bodies of water are very close to each other and only one of them has a wall; that curve would then affect both bodies, which leads to undesirable results. To fix that we have to check whether a certain point on the wall curve “sees” a certain point on the water mesh, and for that the binormal vector of the wall curve is needed.

Fig. 5: Bottom of water pond is calculated from the distance from the edges and retaining walls

The binormals of the curves depend on their direction, but it is a tedious task for the artists to manually correct the direction of the wall curves. In order to fix the direction of the wall curves, first the edges of the water are extracted as right-handed closed curves whose normal vector points toward +Y. Because the curves are right-handed, their tangent and binormal vectors are oriented consistently and can be used as a standard for calculating the binormals of the wall curves. Each wall curve looks up the binormal vector of the nearest point on the water edge. If the curve’s binormal at that point agrees with that nearest point’s binormal, the direction of the wall curve is correct; otherwise the curve needs to be reversed (Fig. 6).
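The direction check can be sketched as follows, treating curves as polylines and taking the binormal as tangent × up. Two things here are assumptions rather than the tool's actual behavior: the cross-product order, and summing the agreement over all wall points instead of testing a single point (which makes the decision less sensitive to one noisy sample).

```python
UP = (0.0, 1.0, 0.0)


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def binormals(points, closed=False):
    """Per-point binormal of a polyline: tangent x up (not normalized)."""
    n = len(points)
    result = []
    for i in range(n):
        if closed:
            prev_pt, next_pt = points[i - 1], points[(i + 1) % n]
        else:
            prev_pt, next_pt = points[max(i - 1, 0)], points[min(i + 1, n - 1)]
        result.append(cross(sub(next_pt, prev_pt), UP))
    return result


def nearest_index(point, candidates):
    """Index of the candidate closest to point (squared distance)."""
    return min(range(len(candidates)),
               key=lambda i: dot(sub(candidates[i], point), sub(candidates[i], point)))


def fix_wall_direction(wall, water_edge):
    """Return the wall polyline, reversed if it runs against the water edge."""
    edge_binormals = binormals(water_edge, closed=True)
    agreement = 0.0
    for point, wall_b in zip(wall, binormals(wall)):
        agreement += dot(wall_b, edge_binormals[nearest_index(point, water_edge)])
    return wall if agreement >= 0.0 else wall[::-1]
```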

Fig. 6: Using a curve with correct direction (the white curve) to correct the binormal vector of another one (the red curve)

Now that the wall curves are corrected we need to determine which parts of the bodies of water are affected by the wall curves. To do so, a vector is calculated as the difference between the point on the water and the nearest point on the wall curve. Then the dot product of that connecting vector and the binormal at that nearest point on the curve is calculated. If the dot product is negative, that point on the wall curve does not “see” that point on the water body and therefore does not affect it; i.e. the wall's depth contribution there is set to zero (Fig. 7).
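A minimal sketch of this visibility test, assuming the connecting vector points from the wall toward the water and that the binormal points toward the side of the wall that has water. The `drop` parameter and the function name are hypothetical.

```python
def wall_depth(water_point, wall_point, wall_binormal, drop):
    """Sudden depth contributed by a wall point; zero when the wall faces away."""
    # Vector from the nearest wall point to the water point.
    to_water = tuple(w - c for w, c in zip(water_point, wall_point))
    # A negative dot product means the wall does not "see" this water point.
    facing = sum(a * b for a, b in zip(to_water, wall_binormal))
    return drop if facing >= 0.0 else 0.0
```

A water point on the binormal side of the wall receives the full `drop`; a point behind the wall, for example in a neighboring pond, receives none.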

Now that we have the natural depth and the sudden depth we can take the minimum of the two to find the final depth. A smoothing pass can be applied if needed in order to create a more organic look. Some water meshes are very simple and need to be subdivided a few times before they can be used for the depth calculation.

Fig. 7: Wall curves affecting the water mesh

Fig. 8: Procedurally generated water depth. Notice the blending between the areas with and without walls

Laser Scanned Data

The scanned data are really dense and heavy and therefore not really suitable for direct use. We also need geometry in order to render the height maps. Triangulating the data takes a very long time and is not very convenient. The main software used for handling, cleaning and preparing point cloud data is Leica Geosystems HDS Cyclone. This software (which is shipped with Leica’s laser scanners) is used for processing, registering and editing point cloud data. One of the main features of Cyclone is the ability to average a point cloud into a grid mesh. These meshes are used for creating the Heightmap. The problem with this type of mesh is that it does not have radial edge flow and therefore creates lots of artifacts around sharp corners and where there are sudden changes in the undulation (Fig. 9 - 1).

To overcome that problem, those areas are detected and re-meshed (Fig. 9 - 2). Other areas such as bunkers and water edges which need more precision can be replaced using the curves mentioned before. Those curves are triangulated (to create points on their surface) and projected onto the mesh. For the water depth the same calculation as for the Gen3 meshes is used.

Fig. 9 - 1: Meshes generated from scanned data have artifacts on steep areas

Fig. 9 - 2: The steep areas are recognized and re-meshed

Masks and Mow-lines

Masks are used in Frostbite for defining different areas on the terrain. These areas are mainly used for texturing layers. In order to create these masks, closed curves are needed. The curve networks that were mentioned before are used in this case. There are different ways to bring curves into the tool. Unfortunately Alembic files do not support curves very well, and FBX files can also be a little problematic. A small Python script exports the selected curve networks in Maya into a custom ASCII format which is later parsed with Python and regenerated in Houdini (this method might later change to a better solution such as parsing the “.ma” files, which are ASCII and therefore easily parsed). By default all the curves are closed (except wall curves) and separated into different groups based on their name and groups in the Maya scene. From there it is pretty easy to render each one separately with a constant color (Fig. 10 - 1).

Some areas of golf courses are covered with grass that is usually mowed in certain directions. These areas are the Fairway, Tee box and Green. The direction of the mowed grass can be expressed in the shader and later in the direction of the grass strands. The tool uses the same curves as the masks to create mow-line textures. In order to save memory, it was decided to export the direction into only one color channel. But recreating a vector from a texture requires at least two values. To solve this problem, the amount of bending of the grass strands is assumed to be constant, and the direction is calculated by mapping the angle, which ranges over [0, 360] degrees on the trigonometric circle, to the interval [0, 1]. In this case the values 0 (black) and 1 both mean +X (east), 0.25 means +Z (north), 0.5 means -X (west) and so on. Another problem is that it would be very hard and inconvenient for the artist to manually set the angle of each piece. The best way to tackle this is to calculate a default direction for the mow lines on each piece and offer the artist the freedom of shifting it by -90 to 90 degrees. This default direction is calculated by finding the longest axis of each piece (Fig. 10 - 2).
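The encoding can be sketched as below. The angle mapping follows the text (0 and 1 for +X, 0.25 for +Z); the way the longest axis is found is an assumption, since the text does not name a method. Here the 2D principal axis of the piece's outline points is used, and all function names are hypothetical.

```python
import math


def encode_direction(dx, dz):
    """Encode a horizontal direction as one channel value in [0, 1]."""
    angle = math.degrees(math.atan2(dz, dx)) % 360.0
    return angle / 360.0


def decode_direction(value):
    """Recover the unit direction (dx, dz) from a channel value."""
    angle = math.radians(value * 360.0)
    return math.cos(angle), math.sin(angle)


def default_mow_angle(points):
    """Angle in degrees of the longest axis of a piece given as (x, z) points."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cz = sum(z for _, z in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points)
    szz = sum((z - cz) ** 2 for _, z in points)
    sxz = sum((x - cx) * (z - cz) for x, z in points)
    # Orientation of the principal axis of the 2x2 covariance matrix.
    return math.degrees(0.5 * math.atan2(2.0 * sxz, sxx - szz)) % 180.0


def mow_value(points, artist_offset=0.0):
    """Channel value for a piece: default direction plus a clamped artist offset."""
    offset = max(-90.0, min(90.0, artist_offset))
    return ((default_mow_angle(points) + offset) % 360.0) / 360.0
```

For a piece that is longest along Z the default value is 0.25; an artist offset of 90 degrees on a piece that is longest along X gives the same result.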

Fig. 10 - 1 (top): Colorized Masks

Fig. 10 - 2 (bottom): Mow-lines

RockOn! Procedural Rock Generator

Fig. 11_1: A procedurally generated real-time rock

One of the challenges for environment artists is creating organic and realistic rocks. Depending on the complexity of a rock, modeling it can take from a few hours to two days. Other difficulties are creating a suitable texture and different levels of detail. RockOn! is a procedural tool which generates convincing rock models (which can be used with no further modification) or good base models for artists to start with. The interface is easy to use and the parameters are simple and optimized.

Fig. 11_2, 3: The product of the tool used in the game

The tool works with multiple layers of displacement which can be turned on or off and adjusted with parameters. The base model of this tool is a simple 4 x 4 x 4 cube which is projected onto a sphere. The reason for using a box is to avoid poles. If the user wants rocks with sharp edges, the main shape (the first layer of displacement) is created based on Worley noise. In this noise algorithm a series of points is randomly scattered on a surface (or in space). Then, for every point of the surface, the function finds the distance to the nearest of those scattered points. If the desired rocks are rounder with no sharp edges, Perlin noise is used with only 2 levels of turbulence (less detail). The user also has the ability to blend between the two.
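The Worley step described above can be sketched in plain Python. This is a brute-force illustration of the idea (distance to the nearest scattered point), not the noise function Houdini ships; the function names, the seed handling and the `amount` parameter are hypothetical.

```python
import math
import random


def scatter_points(count, seed=0):
    """Randomly scatter feature points in the unit cube (deterministic per seed)."""
    rng = random.Random(seed)
    return [(rng.random(), rng.random(), rng.random()) for _ in range(count)]


def worley(position, feature_points):
    """Worley value: distance from position to the nearest feature point."""
    return min(math.dist(position, p) for p in feature_points)


def project_to_sphere(point, radius=1.0):
    """Push a point of the cube out (or in) onto a sphere around the origin."""
    length = math.sqrt(sum(c * c for c in point))
    return tuple(radius * c / length for c in point)


def displace(point, feature_points, amount):
    """Displace a sphere point along its normal by the scaled Worley value."""
    on_sphere = project_to_sphere(point)
    offset = amount * worley(on_sphere, feature_points)
    # On a unit sphere the normal equals the position, so scaling displaces.
    return tuple(c * (1.0 + offset) for c in on_sphere)
```

Because the value changes abruptly where the nearest feature point switches, the displaced surface gets the creased, faceted look that suits sharp-edged rocks.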

Fig. 12: All LODs, normal map and textured rock are created at once in a few milliseconds

For the second layer of displacement another Worley noise pattern is used, but with a higher frequency and a lower magnitude. The third level (the mid-range detail) is generated with another Perlin noise with clamped values. The fourth and fifth are also Perlin noises with lower and higher frequencies. Cracks are made with Voronoi noise; but instead of directly feeding the noise with the point positions of the surface, the point positions are first fed into a Perlin noise and then into the Voronoi noise, followed by some further calculations. The LODs are generated simultaneously, but hidden from the user. For the sake of speed those calculations happen only at render time (Fig. 13).

Fig. 13: The ingredients to make a rock

For projecting textures onto the surface, box mapping gives the best results. The biggest issue with box mapping is that it creates visible seams. A variant called Blended Box Mapping is used in this case. Basically it consists of 3 perpendicular planar mappings which are blended together based on the direction of the surface normal at each point. The advantage of this method is that it creates no seams, and even non-tileable textures can be used while the final texture remains seamless (Fig. 14).
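A small sketch of the blending, assuming a non-zero normal and a texture lookup that returns a single gray value. The `sharpness` exponent and the choice of which coordinates feed each planar projection are assumptions, not details taken from the tool.

```python
def blend_weights(normal, sharpness=4.0):
    """Weights of the X, Y and Z planar projections for a surface normal."""
    raw = [abs(c) ** sharpness for c in normal]
    total = sum(raw)
    return [w / total for w in raw]


def blended_sample(position, normal, sample, sharpness=4.0):
    """Blend three planar lookups of sample(u, v) at a surface point."""
    x, y, z = position
    wx, wy, wz = blend_weights(normal, sharpness)
    # Each projection ignores the axis it looks along.
    return wx * sample(z, y) + wy * sample(x, z) + wz * sample(x, y)
```

Where the normal lines up with one axis only that projection contributes; in between, the three lookups fade into each other, which is what hides the seams.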

Tangent-space normal maps are generated based on the difference between the normal vectors of the 5th and 7th level-of-detail models. For every point on the 5th LOD, a ray is shot toward the 7th LOD along the normal vector, and that ray reads the normal vector of the 7th LOD. The new normal is then transformed into tangent space. To do so, a suitable normal-tangent-binormal frame has to be created first. Every point on the surface has only one normal vector, but infinitely many tangent and binormal vectors. If the surface is parametric, the u and v directions are already embedded in it.

Fig. 14: Blended Box Mapping on a sphere

But for polygonal surfaces we have to use the UV space. The problem is that u and v are each only a single number, which does not provide enough information. Therefore the rate of change of position in each direction (u and v) can be used for each point on the surface. Houdini already provides such variables: dPds and dPdt. The problems with using these variables are that, first, they work only in shaders and, second, they are computed per polygon and therefore produce very faceted results. The best substitute is the PolyFrame node, which can provide normal, tangent and binormal based on the texture coordinates. Now that the orthogonal tangent frame is generated, the normal from the 7th LOD can be transformed into this frame by multiplying it by a 3x3 matrix consisting of the normal, tangent and binormal of the 5th LOD surface. After creating the normal map and diffuse map, the ROP network exports them as textures using the original spherical UV layout (Fig. 15).
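The matrix multiplication amounts to three dot products when the frame is orthonormal. The sketch below assumes unit-length, mutually perpendicular frame vectors and the common 8-bit color encoding of normal maps; the function names are hypothetical.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def to_tangent_space(normal_hi, tangent, binormal, normal_lo):
    """Express a high-detail normal in the low-detail surface's tangent frame."""
    # Rows of the 3x3 matrix are the tangent, binormal and normal of the
    # low-detail surface; multiplying by it is one dot product per row.
    return (dot(normal_hi, tangent),
            dot(normal_hi, binormal),
            dot(normal_hi, normal_lo))


def encode_rgb(vector):
    """Map components from [-1, 1] to 8-bit color values."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in vector)
```

When the high-detail normal coincides with the low-detail one, the result is (0, 0, 1), which encodes to the familiar flat normal-map blue (128, 128, 255).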

Fig. 15: Diffuse and Normal textures generated by the RockOn! tool

Tree Branch Texture Generator

Fig. 16: Tree with twig and leaf textures generated by Houdini

Vegetation is another important element of the golf game. Plant Factory is a great piece of software that generates convincing plant and tree models. One of its main issues is that it does not provide an orthographic camera with precise coordinates, so the user cannot easily create textures for branch and twig clusters. Therefore Houdini is used to create diffuse, normal, specularity, roughness, sub-surface scattering and ambient occlusion texture maps for those branches with the Branch Texture Generator tool.

Fig. 16: Close up of the tree cards with branch textures

First the artist needs to import those assets from Plant Factory into Maya or any other 3D package to arrange them based on the desired UV layout. The models should be confined to a square area from -1 to 1 (in X and Y). The model is then exported as an Alembic file. The tool in Houdini reads the file and extracts the information about the different parts of the model (bark and leaves). Using this information, the correct decisions are made about the materials and textures. The UI is also updated dynamically based on that data. Although the tool makes a good guess about the path and the name of the texture files, the user is able to choose different textures for different parts.

Fig. 17: Shader graph for Tangent Space Normal Map creation

For the diffuse texture a simple constant shader is used which reads the color and alpha map from the texture file and correctly applies them to the different parts of the model. The specularity / roughness / subsurface scattering maps are a little more complicated. Each of these values is stored in one channel of a single texture. The bark is dense and therefore SSS is not applicable to it. But for the leaves the blue channel of the texture is used to calculate the transparency, and those values are saved in the blue channel of the packed texture. For generating the normal map, the user first needs to input the normal map texture. These values are read and transformed into the object’s tangent space with the same method used for the RockOn! tool.

Fig. 17: Diffuse, Normal, Spec/Roughness/Translucency, and Ambient Occlusion maps